Health and Medicine

Generative AI is this CISO’s ‘really eager intern’


Editor’s note: This is part two of a two-part interview on AI and cybersecurity with David Heaney from Mass General Brigham. To read part one, click here.

In the first installment of this deep-dive interview, Mass General Brigham Chief Information Security Officer David Heaney explained defensive and offensive uses of artificial intelligence in healthcare. He said understanding the environment, knowing where one’s controls are deployed and being great at the basics are much more critical when AI is involved.

Today, Heaney lays out best practices healthcare CISOs and CIOs can employ for securing the use of AI, how his team uses them, how he gets his team up to speed when it comes to securing with and against AI, the human element of AI and cybersecurity, and types of AI he uses to combat cyberattacks.

Q. What are some best practices that healthcare CISOs and CIOs can employ for securing the use of AI? And how are you and your team using them at Mass General Brigham?

A. It’s important to start with the way you phrase that question, which is about understanding that these AI capabilities are going to drive amazing changes in how we care for patients and how we discover new approaches and so much more in our industry.

It really is about how we support that and how we help to secure that. As I mentioned in part one, it’s really important to make sure we’re getting the basics right. So, if there’s an AI-driven service that uses our data or is being run in our environment, we have the same requirements in place for risk assessments, for business associate agreements and for any other legal agreements that we’d have for non-AI services.

Because at some level we’re talking about another app, and it needs to be controlled just like any other app in the environment, including restrictions against using unapproved applications. None of that’s to say there aren’t AI-specific considerations we would want to address, and there are a few that come to mind. In addition to the standard legal agreements I just mentioned, there certainly are additional data use considerations.

For example, do you want your organization’s data to be used to train your vendor’s AI models downstream? The security of the AI model itself is important. Organizations need to consider options around continuous validation of the model to ensure it is providing accurate outputs in all scenarios, and that can be part of the AI governance I mentioned in part one.
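One way to picture continuous validation is to re-score the model on a labeled validation set grouped by scenario, so that a drop in one scenario is not hidden by a good overall average. The sketch below is a hypothetical illustration, not MGB’s governance process: it assumes the model is exposed as a plain Python function, and the scenario labels, toy model and 0.95 threshold are made up for the example.

```python
from collections import defaultdict
from typing import Callable, Iterable

# A validation case: (scenario label, model input, expected output).
# Scenario labels and the 0.95 threshold are hypothetical examples.
Case = tuple[str, str, str]


def validate_by_scenario(
    model: Callable[[str], str],
    cases: Iterable[Case],
    min_accuracy: float = 0.95,
) -> dict[str, float]:
    """Return the accuracy of every scenario that falls below min_accuracy."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for scenario, model_input, expected in cases:
        total[scenario] += 1
        if model(model_input) == expected:
            correct[scenario] += 1
    accuracy = {s: correct[s] / total[s] for s in total}
    return {s: acc for s, acc in accuracy.items() if acc < min_accuracy}


# Toy stand-in for a real model: a keyword rule, purely for illustration.
def toy_model(text: str) -> str:
    return "urgent" if "chest pain" in text else "routine"


cases = [
    ("adult", "chest pain at rest", "urgent"),
    ("adult", "prescription refill request", "routine"),
    ("pediatric", "child with chest pain", "urgent"),
    ("pediatric", "infant not feeding", "urgent"),
]
print(validate_by_scenario(toy_model, cases))  # {'pediatric': 0.5}
```

Run on a schedule against fresh labeled data, a non-empty result would be the signal to escalate through whatever AI governance process the organization already has.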

There’s also adversarial testing of the models: if we put in bad input, does it change the way the output comes out? And then one area of the basics whose importance I’ve actually seen change a little in this environment is the ease of adoption of so many of these tools.

An example there: Look at meeting note-taking services like Otter AI or Read AI, and there are so many others. These services are incentivized to make adoption simple and frictionless, and they’ve done a great job at that.

While the concerns around the use of these services and the data they can access don’t change, the combination of easy adoption by our end users and, frankly, the cool factor of these and some other applications makes how you onboard different applications, especially AI-driven applications, an important area to focus on.

Q. How have you been getting your team up to speed when it comes to securing with and against AI? What’s the human element at play here?

A. It’s huge. And one of my top values for my security team is curiosity. I would argue it’s the single skill behind everything we do in cybersecurity. It’s the thing where you see something that’s a little bit funny and you say, “I wonder why that happened?” And you start digging in.

That’s the start of virtually every improvement we make in the industry. So, to that end, a huge part of the answer is having curious team members who get excited about this and want to learn about it on their own. And they just go out and they play with some of these tools.

I try to set an example in the area by sharing how I’ve used the various tools to make my job easier. But nothing replaces that curiosity. Within MGB, within our digital team, we do try to dedicate one day a month to learning, and we provide access to a variety of training services with relevant content in the space. But the challenge with that really is the technology changes faster than the training can keep up with.

So really nothing replaces just going out and playing with the technology. But also, perhaps with a little bit of irony, one of my favorite uses for generative AI is for learning. One of the things I do is use a prompt that says something like, “Create a table of contents for a book titled X,” where X is whatever topic I want to learn about. I also usually specify a little bit about what the author is like and the purpose of the book.

That creates a great outline of how to learn about that topic. Then you can either ask your AI friend, “Hey, can you expand on chapter one? And what does that mean?” or go to other sources or forums to find the relevant content there.
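The learning prompt described above can be captured as a small reusable template. This is a minimal sketch: only the phrase “Create a table of contents for a book titled X” comes from the interview, while the function names, the rest of the wording and the example inputs are assumptions. The resulting strings would be pasted into (or sent to) whichever generative AI tool you use.

```python
def learning_outline_prompt(topic: str, author: str, purpose: str) -> str:
    """Build the 'table of contents' prompt for learning a new topic."""
    return (
        f'Create a table of contents for a book titled "{topic}". '
        f"The author is {author}. "
        f"The purpose of the book is {purpose}."
    )


def expand_chapter_prompt(chapter: int) -> str:
    """Build a follow-up prompt asking the AI to expand one chapter of the outline."""
    return f"Can you expand on chapter {chapter}? What does that mean in practice?"


if __name__ == "__main__":
    # Hypothetical example inputs; substitute your own topic, author and purpose.
    print(learning_outline_prompt(
        "Threat Modeling for Machine Learning Systems",
        "a seasoned security practitioner writing for curious analysts",
        "to build practical skills quickly",
    ))
    print(expand_chapter_prompt(1))
```

Describing the author and purpose steers the outline toward the reader’s level; the follow-up prompt then walks the outline one chapter at a time.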

Q. What are some types of AI you use, without giving away any secrets, of course, to combat cyberattacks? Perhaps you could explain in broader terms how these types of AI are meant to work and why you like them?

A. Our overall digital strategy at MGB is really focused on leveraging platforms from our technology vendors. Picking up a little bit from part one’s vendor question, our focus is working with these companies to develop the most valuable capabilities, many of which are going to be AI-driven.

And just to give a picture of what that looks like, at least in general terms, to not give away the golden goose, so to speak, our endpoint protection tools use a variety of AI algorithms to identify potentially malicious behavior. All of these endpoints then send their logs to a central collection point, where a combination of rules-based and AI-based analysis looks for broader trends.
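To make the rules-based half of that central analysis more concrete, here is a minimal Python sketch of one simple correlation rule: flag an indicator once it shows up on several distinct hosts within a time window. The `EndpointAlert` record, the indicator names and the thresholds (`min_hosts`, `window_seconds`) are hypothetical assumptions for illustration; this does not describe MGB’s actual tooling or rules.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class EndpointAlert:
    """Hypothetical normalized alert forwarded by an endpoint agent."""
    host: str
    indicator: str    # e.g. a behavior name or file hash
    timestamp: float  # seconds since the epoch


def environment_wide_trends(
    alerts: list[EndpointAlert],
    min_hosts: int = 3,
    window_seconds: float = 3600.0,
) -> dict[str, set[str]]:
    """Flag indicators seen on at least min_hosts distinct hosts within one time window."""
    by_indicator: dict[str, list[EndpointAlert]] = defaultdict(list)
    for alert in alerts:
        by_indicator[alert.indicator].append(alert)

    flagged: dict[str, set[str]] = {}
    for indicator, items in by_indicator.items():
        items.sort(key=lambda a: a.timestamp)
        start = 0
        for end in range(len(items)):
            # Slide the window's left edge forward until it spans at most window_seconds.
            while items[end].timestamp - items[start].timestamp > window_seconds:
                start += 1
            hosts = {a.host for a in items[start : end + 1]}
            if len(hosts) >= min_hosts:
                flagged.setdefault(indicator, set()).update(hosts)
    return flagged


alerts = [
    EndpointAlert("ws-01", "encoded-powershell", 0),
    EndpointAlert("ws-02", "encoded-powershell", 600),
    EndpointAlert("ws-03", "encoded-powershell", 1200),
    EndpointAlert("ws-04", "unsigned-driver", 0),
]
print(environment_wide_trends(alerts))
```

A single host raising an alert stays with the endpoint tool; the same behavior on three workstations within an hour becomes an environment-wide signal. The AI-based analysis mentioned in the interview would sit alongside rules like this one, not be replaced by it.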

So the analysis looks not just at one system, but across the entire environment. Are there trends indicative of maybe some elevated risk?

We have an Identity Governance Suite, which is the tooling we use to provision access, that is, to grant and remove access in the environment. That suite of tools has various capabilities built in to identify potential risk: it can spot risky access combinations that might already be in place, or even evaluate access requests as they come in so we avoid granting that access in the first place.
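The identity governance checks described above are often expressed as segregation-of-duties rules: combinations of entitlements that one person should not hold together. A minimal sketch follows, assuming hypothetical entitlement names and rules; commercial identity governance suites implement this differently and with much richer policy models.

```python
# Hypothetical segregation-of-duties rules: entitlement pairs that one
# person should not hold at the same time.
TOXIC_COMBINATIONS: list[frozenset[str]] = [
    frozenset({"billing:modify-charges", "billing:approve-refunds"}),
    frozenset({"iam:create-accounts", "iam:approve-access"}),
]


def existing_violations(current: set[str]) -> list[frozenset[str]]:
    """Toxic combinations a user already holds (review of existing access)."""
    return [combo for combo in TOXIC_COMBINATIONS if combo <= current]


def check_request(current: set[str], requested: str) -> list[frozenset[str]]:
    """Toxic combinations that granting `requested` would newly create."""
    after = current | {requested}
    return [
        combo
        for combo in TOXIC_COMBINATIONS
        if combo <= after and not combo <= current
    ]


user = {"iam:create-accounts", "ehr:read-charts"}
print(existing_violations(user))                  # []
print(check_request(user, "iam:approve-access"))  # flags the IAM pair
```

The two functions mirror the two uses described in the interview: reviewing access that is already in place, and screening a request before it is granted.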

So that’s the world of the platforms themselves and the technology that’s built in. But beyond that, going back to how we can use generative AI in some of these areas, we use that to accelerate all kinds of tasks we used to do manually.

The team has gotten, I couldn’t put a number on it, but I’ll say tons of time savings by using generative AI to write custom scripts for triage, for forensics and for remediation of systems. It’s not perfect. The AI gets us, I don’t know, 80% complete, but our analysts then finalize the script, and they do so much more quickly than if they were writing it from scratch.

Similarly, we use some of these AI tools to create queries that go into our other tools. We get our junior analysts up to speed much faster by letting them have access to these tools to help them more effectively use the various other technologies we have in place.

Our senior analysts are just more efficient. They already know how to do a lot of this, but it’s always better to start from 80% than to start from zero.

In general, I describe it as my really eager intern. I can ask it to do anything and it’ll come back with something between a really good starting point and potentially a great and complete answer. But I certainly wouldn’t go and use that answer without doing my own checks and finishing it first.


Editor’s Note: This is the tenth and final in a series of features on top voices in health IT discussing the use of artificial intelligence. Read the other installments:

- Dr. John Halamka of Mayo Clinic Platform
- Dr. Aalpen Patel of Geisinger
- Helen Waters of Meditech
- Sumit Rana of Epic
- Dr. Rebecca G. Mishuris of Mass General Brigham
- Dr. Melek Somai of the Froedtert & Medical College of Wisconsin Health Network
- Dr. Brian Hasselfeld of Johns Hopkins Medicine
- Craig Kwiatkowski of Cedars-Sinai
- Dr. Bruce Darrow of Mount Sinai Health System

Follow Bill’s HIT coverage on LinkedIn: Bill Siwicki


Healthcare IT News is a HIMSS Media publication.

The HIMSS Healthcare Cybersecurity Forum is scheduled to take place October 31-November 1 in Washington, D.C.

General

Rotary Invests $2 Million in Maternal Health: Foundation Chair Visits Nigeria to Explore New Opportunities


Health and Medicine

Ottawa Shelters: Festive Dinners and Gifts for Residents over the Holidays

On Christmas Day, Shepherds of Good Hope welcomed hundreds of people for a warm holiday meal. Azery Sharrons, a 70-year-old man living at the shelter, expressed his gratitude for a meal that reminded him of his childhood. Husa Delice, a dedicated volunteer since 2022, highlighted the importance of this community, where smiles and human warmth abound. This Christmas was not just a celebration; it was an opportunity to bring together those in need and offer a little comfort in their difficult lives.

Christmas Celebrations at Shepherds of Good Hope: A Warm Meal for Those Most in Need

On Christmas Day, Shepherds of Good Hope welcomed a large number of people for a home-cooked meal. Organizers expected to serve several hundred guests over the course of the day.

Volunteer Commitment

Husa Delice, a volunteer since 2022, shared his experience, saying the place was “wonderful” and had allowed him to meet great people. He noted that members of the community were “happy” and had “smiles on their faces.”

A Warm Atmosphere Despite the Cold

Delice stressed the importance of offering warmth and comfort to people in need during the cold season. Azery Sharrons, a 70-year-old man living in one of the shelter’s accommodation areas, expressed gratitude for the hearty meal, which reminded him of holiday traditions.

Post-Christmas Activities at the Shelter

The day after Christmas, the shelter plans to host a group of singers who will brighten the afternoon with traditional carols. Donations such as warm clothing and hygiene products will also be distributed to visitors.

Essential Material Needs

Donations such as mugs, plates and cutlery are crucial for the community kitchen. Blankets and pillows are also greatly appreciated by those staying at the shelter.

Expanding the Festivities

Alongside these activities at its main site on Murray Street, the Shepherds of Good Hope team visited five supportive housing residences to serve the traditional dinner and hand out gifts there as well.

Careful Preparation in the Kitchen

Peter Gareau, food services manager at the shelter, explained that intensive preparation is needed before Christmas. Although he initially feared he would not have enough turkeys because of the large number of guests expected, he was relieved that local generosity allowed him to get enough to feed all the guests for the entire week.

Gareau also noted that these festive meals can stir happy childhood memories for some clients. “Nobody chooses this way of life,” he said, expressing hope that the meals might encourage some of them to take part in the programs the shelter offers.

Other Community Initiatives

Other organizations also held their own celebrations during the holiday season. Cornerstone Housing for Women, for example, organized a community drive that produced 321 gift bags for residents in precarious situations.

Chris O’Gorman said these gestures clearly show recipients that their community supports them. Working with Restaurant 18, they prepared several hundred traditional dishes for the people living in their facilities.

Unexpected Donations

Peter Tilley, CEO of the Ottawa Mission, described how the Mission received more than 200 donations from a group called Backpacks for the Homeless. The packages contained a range of items, from socks to a Tim Hortons gift card.

Tilley added that the Mission kept its chapel open all day so that people feeling lonely could quietly watch some classic films and enjoy a comforting moment during this difficult time.

Health and Medicine

The Stars of Downton Abbey: What Have They Been Up To Since the Series Ended?

Nearly nine years ago, Downton Abbey took its final bow on ITV, but the legacy of this iconic series lives on. Thanks to two films, with a third in production, fans can still catch up with their favorite characters. Hugh Bonneville, Michelle Dockery and Joanne Froggatt have continued to shine on screen in compelling roles. Discover what these talented actors have accomplished since the series ended and how they have evolved over the years. The magic of Downton Abbey is far from fading!


Time flies: it has already been nine years since Downton Abbey ended on ITV. Fortunately, the franchise has spawned two films, and a third was shot this summer.

Since the series concluded, we have had the pleasure of seeing the cast reprise their iconic roles, with a few notable exceptions. Although we never really said goodbye to the characters, a lot has changed for Hugh Bonneville, Michelle Dockery, Joanne Froggatt and others since their time on the show. Here is a look at what they have achieved over the past (almost) ten years…

### Hugh Bonneville: A Varied Career

Hugh Bonneville is best known for playing Robert Crawley, Earl of Grantham. After Downton Abbey ended, he continued to shine on screen in the Paddington franchise, while also landing roles in productions such as I Came By (2022), Bank of Dave (2023) and the upcoming Douglas Is Cancelled, due in 2024.

This summer, Hugh also took part in filming the third Downton Abbey film, which has not yet received an official title.

### Michelle Dockery: Bold Roles

Michelle Dockery continues to play Lady Mary Crawley brilliantly while taking on darker and more complex roles. Her recent television appearances include the series Godless (2017), Anatomy of a Scandal (2022) and the upcoming This Town, due in 2024. On the big screen, she has appeared in films such as The Gentlemen (2019), as well as more recent productions like Boy Kills World (2023) and the eagerly awaited Flight Risk (2024).

### Joanne Froggatt: A Flourishing Career

Joanne Froggatt rose to fame as Anna Bates in the acclaimed series and its film adaptations. Since the show ended in 2015, she has landed leading roles in several TV series, including Liar (2017-20), Angela Black (2021) and Breathtaking (due in 2024). She was recently confirmed for an untitled new crime series directed by Guy Ritchie, alongside Tom Hardy and Pierce Brosnan.

### Jessica Brown Findlay: A Rising Star

Jessica Brown Findlay became known for playing Lady Sybil Branson before leaving Downton Abbey in 2012. Since then, she has starred in several notable productions, including Jamaica Inn (2014), Harlots (2017-19) and Brave New World (2020). Her latest project is the TV series Playing Nice, alongside James Norton, which is due out on 5 January.

### Laura Carmichael: A Versatile Artist

Laura Carmichael made her mark with a remarkable performance as Edith Crawley. She continues to bring her unique touch to the small screen, notably as Margaret Pole in The Spanish Princess (2019-20) and as Agatha in The Secrets She Keeps.

### Jim Carter: A Prolific Actor

Jim Carter has kept busy! Since Downton Abbey ended, he has appeared in productions including King Lear (2018) and The Good Liar (2019). More recently, he appeared in the films The Sea Beast (2022) and Wonka (slated for 2023).

### Penelope Wilton: An Impressive Career

Penelope Wilton is celebrated for her portrayal of Isobel Grey. Her filmography includes works such as The Guernsey Literary and Potato Peel Pie Society (2018) and After Life (2019-22), as well as Operation Mincemeat and The Unlikely Pilgrimage of Harold Fry (both slated for this year).

### Tribute to Dame Maggie Smith

Maggie Smith shone as the formidable Violet Crawley, Dowager Countess of Grantham. In addition to reprising that iconic role in the subsequent films, she took on many other recent projects, including Sherlock Gnomes (2018), A Boy Called Christmas (2021) and The Miracle Club (2023). Sadly, Maggie died on 27 September at the age of 89, prompting a wave of moving tributes from her Downton friends.

-

Business1 an ago

Business1 an agoComment lutter efficacement contre le financement du terrorisme au Nigeria : le point de vue du directeur de la NFIU

-

Général2 ans ago

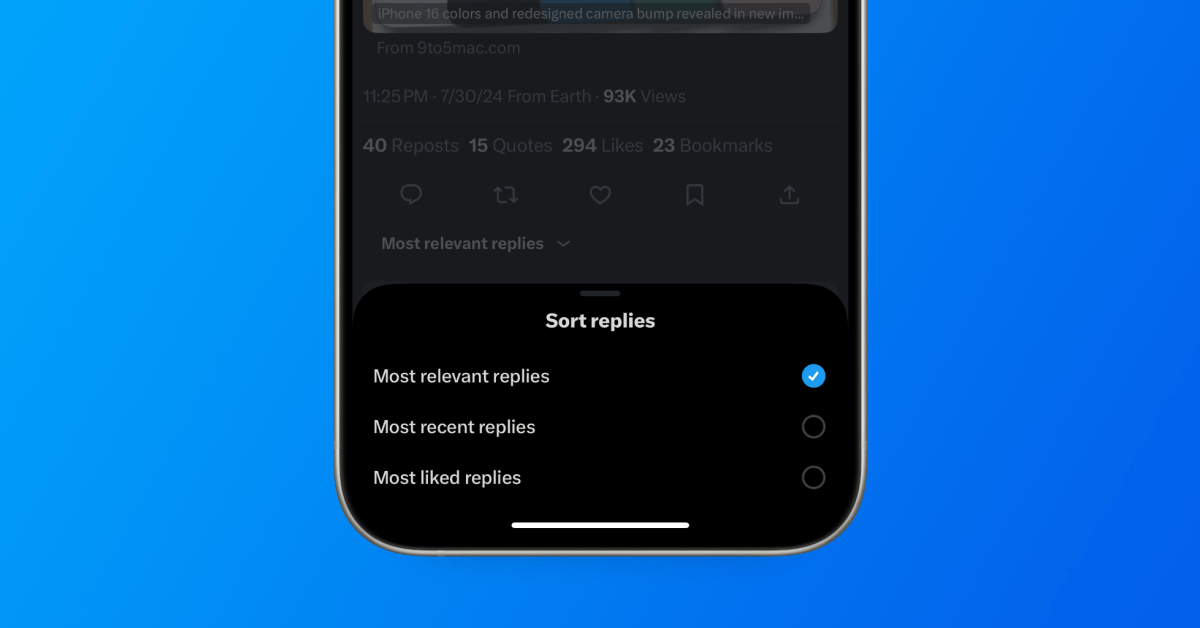

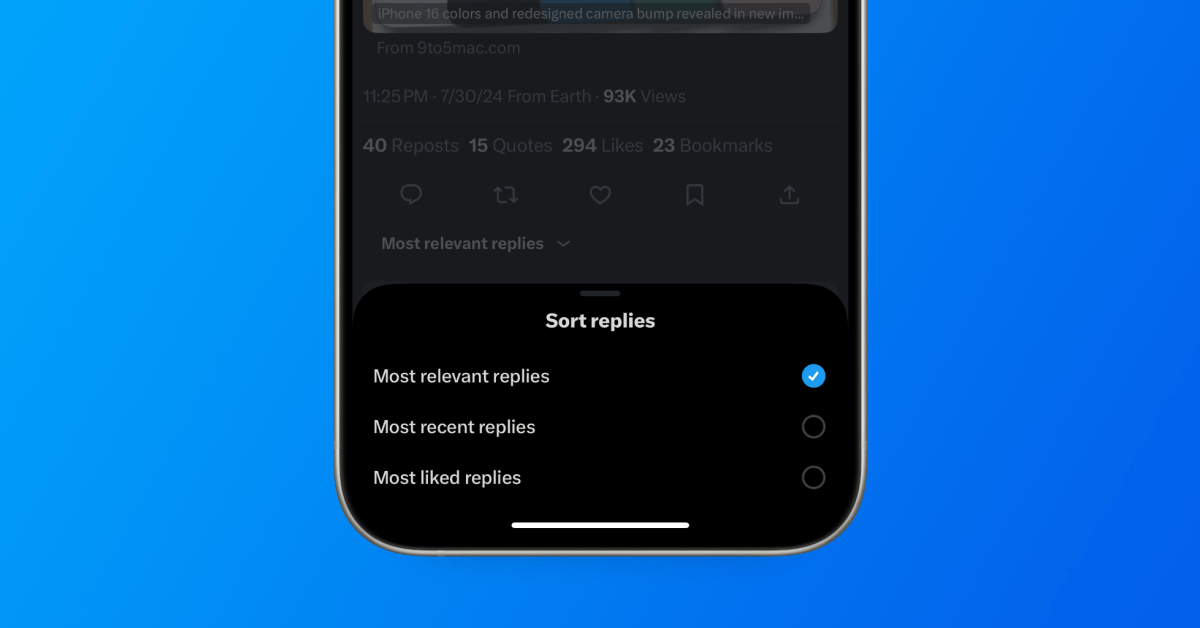

Général2 ans agoX (anciennement Twitter) permet enfin de trier les réponses sur iPhone !

-

Technologie1 an ago

Technologie1 an agoTikTok revient en force aux États-Unis, mais pas sur l’App Store !

-

Général1 an ago

Général1 an agoAnker SOLIX dévoile la Solarbank 2 AC : la nouvelle ère du stockage d’énergie ultra-compatible !

-

Général1 an ago

Général1 an agoLa Gazelle de Val (405) : La Star Incontournable du Quinté d’Aujourd’hui !

-

Sport1 an ago

Sport1 an agoSaisissez les opportunités en or ce lundi 20 janvier 2025 !

-

Business1 an ago

Business1 an agoUne formidable nouvelle pour les conducteurs de voitures électriques !

-

Science et nature1 an ago

Science et nature1 an agoLes meilleures offres du MacBook Pro ce mois-ci !